Downloading files from a web browser or another GUI application is easy, and almost all of us can do it without any issues. But if you're a frequent Linux command line user who often needs to download files from the internet, switching to a GUI just for that can be cumbersome. Fortunately, several tools let you download files directly from the Linux command line, and some of them are like a Swiss Army knife, capable of many other tasks too. Let's take a look at some of the best and most reliable file-downloading utilities for the Linux command line.

Although these tools are thoroughly tested and very reliable, I suggest avoiding extremely large downloads. On a regular broadband connection, a reasonable upper limit for a single file is about 2GB.

Almost all of these tools work flawlessly on all the popular Linux distributions. The installation method may differ from one distribution to another, but the tools work the same way everywhere. Let's check out each one of them.

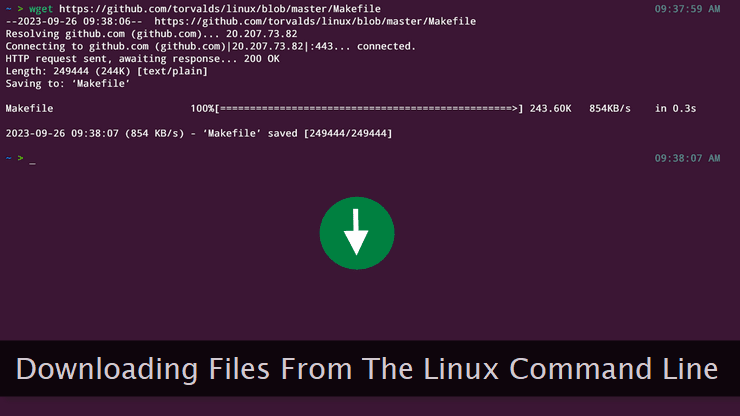

1. wget

Wget is one of the most popular file-downloading (transfer) utilities. It's frequently used by Linux system administrators, developers, and savvy users.

You can use the wget command right away on almost any Linux distribution, as it usually comes preinstalled. Here are some of the prominent features of this file-downloading utility.

- Supports multiple file transfer protocols (HTTP, HTTPS, and FTP).

- Can be used to mirror entire websites in one go.

- Has the capability to resume stalled downloads.

- Supports non-interactive file downloads.

- Supports both HTTP proxies and cookies.

Let's look at a couple of examples of how to use the wget command.

# Download the file accessible at the provided URL
wget https://raw.githubusercontent.com/torvalds/linux/master/Makefile

# Log in to the server with appropriate authorization
wget --keep-session-cookies --save-cookies example-domain-cookies.txt \
     --post-data 'user=edmonds&password=passcode' \
     http://example-domain.com/auth-routine.php

# Download the file behind the authorization wall
wget --load-cookies example-domain-cookies.txt \
     -p http://example-domain.com/premium/reports-and-data.php

In the first example shown above, we're downloading the Linux kernel's top-level Makefile from GitHub. Nothing fancy here! Simply provide the URL as an argument and you're good to go. Note that the URL points to raw.githubusercontent.com: the regular github.com "blob" page URL returns the HTML page that displays the file, not the file itself.

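The second and third commands demonstrate the power and flexibility of this tool. First, we log in to the server by posting the credentials and save the cookies it sends back to example-domain-cookies.txt. The --keep-session-cookies option makes wget save session cookies as well, which it would otherwise discard.

Thereafter, we load those cookies and download reports-and-data.php, which is hidden behind the authorization wall. The -p (--page-requisites) option also fetches the images, stylesheets, and other files needed to display that page.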
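Resuming a stalled download, one of the features listed above, relies on HTTP range requests: a client that already holds the first N bytes of a file asks the server only for the rest. The bash sketch below is purely illustrative (resume_header is a hypothetical helper, not part of wget); it prints the request header a resuming client would send for a given partial file.

```shell
# Requires bash. resume_header is a hypothetical helper for illustration only.
# Given a partially downloaded file, print the HTTP "Range" header a client
# would send to fetch only the bytes it does not have yet.
resume_header() {
  local partial=$1
  local have=0
  if [ -f "$partial" ]; then
    # Size of the partial file in bytes (some wc versions pad with spaces).
    have=$(wc -c < "$partial")
  fi
  # Arithmetic expansion strips any padding left by wc.
  printf 'Range: bytes=%d-\n' "$(( have ))"
}
```

In practice you never build this header yourself: wget -c URL and curl -C - -O URL inspect the partial file and request the missing range automatically. Resuming only works when the server supports range requests.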

2. curl

This is yet another extremely flexible tool for downloading files from the internet. Like wget, it comes preinstalled on most Linux distributions; if it's missing, you can install it with your package manager (for example, sudo apt-get install curl on Ubuntu).

It's used by millions of users and is known for its reliability. Following are some of the features that make it a must-have tool in every Linux user's toolkit.

- Supports FTP, FTPS, HTTP, and HTTPS protocols to facilitate file downloads.

- Can download multiple files in parallel (with the -Z/--parallel option in curl 7.66.0 and later).

- Can fetch files residing behind an authorization wall.

- Can work non-interactively while downloading files.

- A complete Swiss Army knife for grabbing data from the internet.

Here are a couple of examples of downloading a file with the curl utility.

# Download the file and keep the original name intact
curl -O https://raw.githubusercontent.com/torvalds/linux/master/Makefile

# Download the file and save it locally under a new name
curl -o test-MakeFile https://raw.githubusercontent.com/torvalds/linux/master/Makefile

In the first example given above, the file is downloaded and saved under its original name in the current directory. In the second example, the file is given a new name when it's saved locally.

The first command uses the -O (--remote-name) switch, while the second uses the -o <local file name> (--output) parameter.

I prefer curl over wget. Though wget is also a popular tool for downloading files, I like curl's prompts and help messages a bit more.
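Because curl isn't installed everywhere, a small wrapper that prefers curl and falls back to wget can be handy in scripts. The bash sketch below is hypothetical (fetch, FETCH_TOOL, and FETCH_DRY_RUN are names made up for this example) and uses only standard options: --fail makes curl exit with an error instead of saving an HTTP error page, --location follows redirects, and wget's --output-document sets the local file name.

```shell
# Requires bash. Hypothetical helper: fetch URL [OUTFILE]
# Uses curl when available, otherwise wget. Set FETCH_TOOL=curl|wget to force
# a tool, and FETCH_DRY_RUN=1 to print the command instead of running it.
fetch() {
  local url=$1
  local out=${2:-${url##*/}}   # default name: last path segment of the URL
  local tool=${FETCH_TOOL:-}

  if [ -z "$tool" ]; then
    if command -v curl >/dev/null 2>&1; then
      tool=curl
    elif command -v wget >/dev/null 2>&1; then
      tool=wget
    else
      echo "fetch: neither curl nor wget is installed" >&2
      return 127
    fi
  fi

  local -a cmd
  case $tool in
    curl) cmd=(curl --fail --location --output "$out" "$url") ;;
    wget) cmd=(wget --output-document="$out" "$url") ;;
    *)    echo "fetch: unsupported tool '$tool'" >&2; return 2 ;;
  esac

  if [ "${FETCH_DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "${cmd[*]}"   # show the command only
  else
    "${cmd[@]}"                 # run it
  fi
}
```

For example, fetch https://raw.githubusercontent.com/torvalds/linux/master/Makefile saves Makefile in the current directory, and prefixing it with FETCH_TOOL=wget forces wget even when curl is present.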

3. axel

If you frequently download large files from the Linux command line, the axel download accelerator is your best bet. It divides the file into multiple chunks and downloads them in parallel.

This can speed up the download significantly. It doesn't come preinstalled, but you can install it on an Ubuntu machine using the following command.

# Install 'axel' on Ubuntu
sudo apt-get install axel

The following features of this file downloader make it a worthy competitor.

- Ability to download a file through multiple parallel connections.

- Ability to resume stalled or broken downloads.

- A maximum download speed can be set to save bandwidth.

- Proxy server support.

- Ability to download a file quietly without any output.

Let's see some of the common axel command examples.

# Download a file
axel https://raw.githubusercontent.com/torvalds/linux/master/Makefile

# Download a file to a specific location with a different name
axel -o /path/to/file/Makefile-copy https://raw.githubusercontent.com/torvalds/linux/master/Makefile

# Download a file using 8 connections
axel -n 8 https://raw.githubusercontent.com/torvalds/linux/master/Makefile

Pay attention to the last example. Here, we're opening 8 connections to speed up the download of a single file. This file-downloading utility is lightweight and works like a charm.

It can also search for mirrors to use as alternative download sources (the -S option). However, this may increase the total download time, as the command first searches for and tests the speed of all the available servers.
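To make the chunking idea concrete, here is a simplified bash sketch of how a file's bytes can be divided among N connections. It illustrates the general technique only, not axel's actual code, and split_ranges is a hypothetical helper name.

```shell
# Requires bash. split_ranges SIZE N (hypothetical helper)
# Prints N inclusive byte ranges ("START-END", one per line) that together
# cover a file of SIZE bytes; the last range absorbs any remainder.
split_ranges() {
  local size=$1 n=$2
  if (( size <= 0 || n <= 0 )); then
    echo "split_ranges: SIZE and N must be positive integers" >&2
    return 1
  fi
  # Never create more ranges than there are bytes.
  if (( n > size )); then
    n=$size
  fi
  local chunk=$(( size / n ))
  local i start end
  for (( i = 0; i < n; i++ )); do
    start=$(( i * chunk ))
    if (( i == n - 1 )); then
      end=$(( size - 1 ))
    else
      end=$(( start + chunk - 1 ))
    fi
    printf '%d-%d\n' "$start" "$end"
  done
}
```

Each range could then be requested separately with curl's -r option (for example, curl -r 0-249 -o part.0 URL) and the parts joined in order with cat. Axel and aria2 handle all of this for you, including retries and reassembly, and the approach only works when the server honors HTTP range requests.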

4. aria2

The next one is the aria2 download utility. It's a blazing-fast tool for downloading files over several protocols, including HTTP, HTTPS, FTP, SFTP, BitTorrent, and Metalink.

Quite similar to axel, it can open multiple connections for a single file. On an Ubuntu machine, you can install aria2 using the following command. Note that the package is called aria2, but the command itself is aria2c.

# Install 'aria2' on an Ubuntu machine
sudo apt-get install aria2

Let's take a quick look at some of its features.

- Multiple protocols are supported out of the box.

- Resumes stalled downloads automatically.

- Multi-connection downloads.

- Can act as a full-fledged BitTorrent client.

- Leaves a light footprint on system resources.

Finally, it's time to quickly go through its file-downloading commands.

# Download a file from the internet
aria2c https://raw.githubusercontent.com/torvalds/linux/master/Makefile

# Download a file using up to 4 connections to the server
aria2c -x4 https://raw.githubusercontent.com/torvalds/linux/master/Makefile

# Save the downloaded file with a different name
aria2c -o Makefile-copy https://raw.githubusercontent.com/torvalds/linux/master/Makefile

The download speed of this tool is comparable to that of axel. Both take almost the same time to download a large file.

To install it on distros other than Ubuntu, please refer to the documentation of the aria2 command.
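aria2c can also read a batch of downloads from a text file with its -i option. The bash sketch below writes such a list in aria2's input-file format: each URI on its own line, followed by indented per-download options such as out= (the local file name). The make_aria2_list helper and the example URLs are hypothetical.

```shell
# Requires bash. make_aria2_list LISTFILE URL NAME [URL NAME ...] (hypothetical helper)
# Writes an aria2 input file: one URI per line, each followed by an
# indented "out=" option line that sets the saved file name.
make_aria2_list() {
  local list=$1
  shift
  if (( $# == 0 || $# % 2 != 0 )); then
    echo "usage: make_aria2_list LISTFILE URL NAME [URL NAME ...]" >&2
    return 2
  fi
  : > "$list"   # start with an empty list file
  while (( $# >= 2 )); do
    printf '%s\n  out=%s\n' "$1" "$2" >> "$list"
    shift 2
  done
}
```

You could then run something like aria2c -i downloads.txt -j 2 -x4, where -j limits how many files are downloaded at the same time and -x4 still applies to each file.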

5. scp

scp (secure copy) is another tool in your arsenal for downloading files from remote servers over SSH. It ships with the OpenSSH client on almost every Linux distribution and works flawlessly.

To download files with this tool, you need the username and server address along with the file's path on that server; providing just a URL won't work. Let's look at a couple of commands that use this tool.

# Copy a file from a remote server to your specified path
scp username@servername:/path/to/remote/file /path/to/local/directory

# Copy a file 'records.dat' to the current directory
scp user@example.com:/path/to/remote/file/records.dat .

Following are some of its important characteristics.

- Can preserve the modification times, access times, and modes of transferred files (-p).

- Can recursively transfer entire directories (-r).

- Can compress data during transfers (-C).

- Can run quietly, without the progress meter or other output (-q).

You can use it to transfer files within a local machine or between two different remote servers.
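The options behind the characteristics listed above can be combined in a single command. This bash sketch wraps them in a hypothetical pull_dir helper (the function name, host, and paths are placeholders) that copies a whole remote directory into a local one.

```shell
# Requires bash. pull_dir USER@HOST REMOTE_DIR LOCAL_DIR (hypothetical helper)
# Copies a remote directory tree over SSH; the remote directory ends up
# inside LOCAL_DIR, which is created first if needed.
pull_dir() {
  local remote=$1 remote_dir=$2 local_dir=$3
  mkdir -p "$local_dir"
  # -r recurse into directories, -p preserve times and modes,
  # -C compress data in transit, -q hide the progress meter.
  scp -r -p -C -q "$remote:$remote_dir" "$local_dir"
}
```

For example, pull_dir user@example.com /var/log/app ./logs would leave the remote app directory at ./logs/app.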

6. rsync

This is one of my favorite commands; I've used it in the past to back up WordPress websites. The rsync command can keep remote and local copies in sync, which makes it great for backups.

The sheer number of switches (options) available with this command is overwhelming. Like scp, it can transfer files within a local system or between a local and a remote machine, although, unlike scp, it can't copy directly between two remote hosts.

Following are some of the important features of this command that you should know about.

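- Can seamlessly update and sync entire filesystems and large directories.

- Can preserve file attributes such as permissions, timestamps, and symbolic links while transferring (for example, with -a, archive mode).

- Speeds up transfers with a delta-transfer algorithm that sends only the changed parts of files, and can also compress data in transit (-z).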

And finally, let's see how we can use it to transfer files across devices.

# Transfer a file from a remote server to a local machine
rsync -av user@server-address:/path/to/remote/file.txt /path/to/target/directory

Here, -a enables archive mode, which preserves file attributes, and -v lists each file as it's transferred. Administrators can use this command within shell scripts and automate incremental backups with the help of cron jobs.
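Here's one way such a backup job could look. It's a sketch under stated assumptions, not a drop-in tool: the script name wp-backup.sh, the run_backup function, and all paths are hypothetical. Each run creates a dated snapshot directory, and rsync's --link-dest option hard-links files that haven't changed since the previous snapshot, so only changed files take up new disk space.

```shell
#!/usr/bin/env bash
# Hypothetical wp-backup.sh: incremental snapshot backups with rsync.
# Usage: wp-backup.sh SOURCE DEST_ROOT
# A trailing slash on SOURCE copies its contents rather than the directory.
set -euo pipefail

run_backup() {
  local src=$1 dest_root=$2
  local stamp target
  stamp=$(date +%Y-%m-%d_%H%M%S)
  target="$dest_root/$stamp"

  mkdir -p "$dest_root"
  if [ -d "$dest_root/latest" ]; then
    # A relative --link-dest path is resolved against the destination
    # directory, so ../latest points at the previous snapshot.
    rsync -a --link-dest=../latest "$src" "$target"
  else
    # First run: nothing to link against, so make a full copy.
    rsync -a "$src" "$target"
  fi

  # Repoint the 'latest' symlink at the snapshot just created.
  ln -sfn "$stamp" "$dest_root/latest"
}

# Run only when both arguments are given on the command line.
if [ $# -eq 2 ]; then
  run_backup "$1" "$2"
fi
```

Saved as /usr/local/bin/wp-backup.sh and made executable, it could be scheduled to run every night at 2:00 AM with a crontab entry (added via crontab -e) such as: 0 2 * * * /usr/local/bin/wp-backup.sh user@server-address:/var/www/ /backups/wordpress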

Conclusion

When it comes to getting a job done on a Linux machine, there are usually several ways to do it. GUI and command-line tools exist for almost every task, and downloading files is no different.

The six file-downloading tools explained above are popular and used by millions of Linux users. Try each one and pick the one that best meets your requirements.